Abstract

Imitation learning-based robot control policies are enjoying renewed interest in video-based robotics. However, it remains unclear whether this approach applies to X-ray-guided procedures, such as spine instrumentation. This is because interpretation of multi-view X-rays is complex. We examine opportunities and challenges for imitation policy learning in bi-plane-guided cannula insertion.

We develop an in silico sandbox for scalable, automated simulation of X-ray-guided spine procedures with a high degree of realism. We curate a dataset of correct trajectories and corresponding bi-planar X-ray sequences that emulate the stepwise alignment performed by providers. We then train imitation learning policies for planning and open-loop control that iteratively align a cannula based solely on visual information. This precisely controlled setup offers insight into the capabilities and limitations of this method.
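The stepwise alignment described above can be illustrated in two parts: turning a demonstrated trajectory into incremental actions (per-step pose differences), and rolling out a policy by repeatedly applying the adjustment it predicts. This is a minimal sketch under stated assumptions: the 5-value pose parametrization, the stopping tolerance, and the proportional `make_toy_policy` stand-in for the learned model are all hypothetical, and a real policy would consume AP and lateral X-rays rendered at the current pose rather than the pose itself.

```python
import math
from typing import Callable, List, Sequence

Pose = List[float]  # hypothetical cannula pose, e.g. [x, y, z, yaw, pitch] (mm, degrees)


def to_incremental_actions(trajectory: Sequence[Sequence[float]]) -> List[Pose]:
    """Turn a demonstrated pose sequence p_0..p_T into step labels a_k = p_{k+1} - p_k."""
    return [[b - a for a, b in zip(p, q)] for p, q in zip(trajectory[:-1], trajectory[1:])]


def rollout(policy: Callable[[Pose], Pose], init_pose: Sequence[float],
            max_steps: int = 20, tol: float = 1e-3) -> List[Pose]:
    """Apply the adjustments proposed by `policy` until it predicts (near) zero motion.

    Hypothetical simplification: the policy receives the pose directly instead of
    the AP and lateral X-rays that would be rendered at that pose.
    """
    pose = list(init_pose)
    poses = [list(pose)]
    for _ in range(max_steps):
        delta = policy(pose)
        if math.sqrt(sum(d * d for d in delta)) < tol:
            break  # the policy considers the cannula aligned
        pose = [p + d for p, d in zip(pose, delta)]
        poses.append(list(pose))
    return poses


def make_toy_policy(target: Sequence[float], gain: float = 0.5) -> Callable[[Pose], Pose]:
    """Hypothetical stand-in for the learned policy: a proportional step toward a known target."""
    return lambda pose: [gain * (t - p) for t, p in zip(target, pose)]


# Usage: derive action labels from a short demonstration, then roll out the toy policy.
demo = [[0.0, 0.0], [1.0, 2.0], [3.0, 3.0]]
actions = to_incremental_actions(demo)  # [[1.0, 2.0], [2.0, 1.0]]
traj = rollout(make_toy_policy([10.0, -5.0, 40.0, 15.0, 5.0]), [0.0, 0.0, 30.0, 0.0, 0.0])
```

Predicting small increments rather than an absolute final pose lets each step be a modest correction, which matches the stepwise, surgeon-like alignment the dataset is built to emulate.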

Our policy succeeded on the first attempt in 68.5% of cases, maintaining safe intra-pedicular trajectories across diverse vertebral levels. The policy generalized to complex anatomy, including fractures, and remained robust to varied initializations. Rollouts on real bi-planar X-rays further suggest that the model can produce plausible trajectories, despite training exclusively in simulation.
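Whether a trajectory is safe and intra-pedicular can be checked geometrically. The sketch below is one simplified way to do so and is not the paper's criterion: it assumes the pedicle is approximated as an elliptical cylinder in a local frame (z along the pedicle axis, x mediolateral, y craniocaudal), and it calls a straight entry-to-target path safe if every sampled point within the pedicle's z-span stays inside the wall shrunk by a safety margin. The pedicle model, margin, and names are hypothetical. It also shows how a first-attempt success rate is computed from per-case outcomes.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple

Point = Tuple[float, float, float]


@dataclass
class EllipticalPedicle:
    """Hypothetical pedicle model: elliptical cylinder centred on the local z-axis (mm)."""
    semi_ml: float  # mediolateral semi-axis (x)
    semi_cc: float  # craniocaudal semi-axis (y)
    z_start: float  # start of the pedicle along its axis
    z_end: float    # end of the pedicle along its axis


def is_intrapedicular(entry: Point, target: Point, ped: EllipticalPedicle,
                      margin: float = 1.0, samples: int = 100) -> bool:
    """True if the straight entry->target path crosses the pedicle without breaching its shrunken wall."""
    a, b = ped.semi_ml - margin, ped.semi_cc - margin
    if a <= 0 or b <= 0:
        return False  # the margin leaves no safe corridor
    crossed = False
    for i in range(samples + 1):
        t = i / samples
        x, y, z = (e + t * (g - e) for e, g in zip(entry, target))
        if ped.z_start <= z <= ped.z_end:
            crossed = True
            if (x / a) ** 2 + (y / b) ** 2 > 1.0:
                return False  # point lies outside the pedicle wall minus margin
    return crossed  # a path that never reaches the pedicle is not counted as intra-pedicular


def success_rate(outcomes: Sequence[bool]) -> float:
    """Percentage of cases judged successful."""
    return 100.0 * sum(outcomes) / len(outcomes)
```

For example, with `EllipticalPedicle(4.0, 7.0, 0.0, 15.0)`, a path along the pedicle axis passes, while a path tilted 10 mm off-axis at entry breaches the shrunken wall.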

While these preliminary results are promising, we also identify limitations, especially in entry point precision. Full closed-loop control will require additional considerations around how to provide sufficiently frequent feedback. With more robust priors and domain knowledge, such models may provide a foundation for future efforts toward lightweight and CT-free robotic intra-operative spinal navigation.

Policy rollout on real X-ray images, with the simulated cannula overlaid

Figures

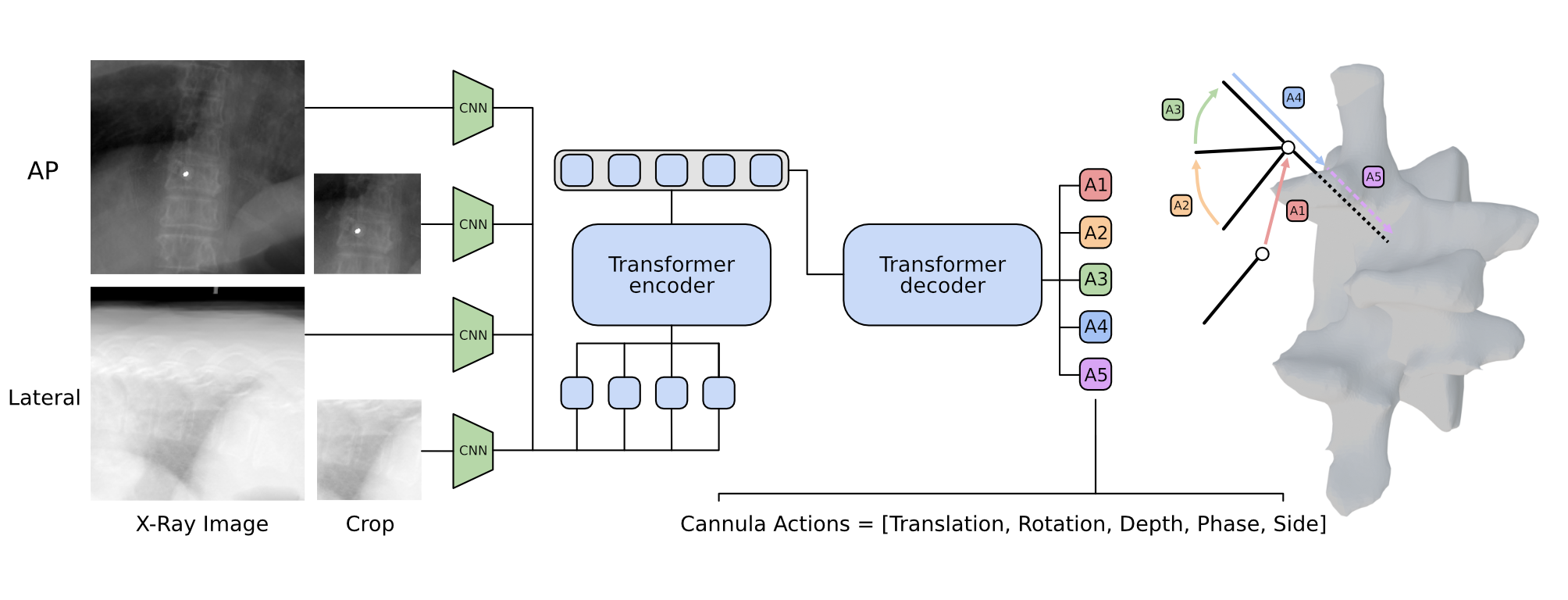

Overview. Left to right: the inputs, consisting of the current AP and lateral observations, are processed by a conditional variational autoencoder. Fine-grained pose adjustments for the cannula are predicted as actions that build up the final insertion trajectory, modeling surgeon-like adjustments.
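A conditional variational autoencoder of the kind named in this caption is typically trained with a reconstruction term on the predicted actions plus a KL term that pulls the encoder's latent distribution toward a standard normal, sampling the latent with the reparameterization trick. The sketch below shows only these generic pieces in plain Python; the L1 reconstruction loss, the weight `beta`, and all function names are assumptions rather than details taken from the paper.

```python
import math
import random
from typing import List, Optional, Sequence


def reparameterize(mu: Sequence[float], logvar: Sequence[float],
                   eps: Optional[Sequence[float]] = None) -> List[float]:
    """Sample z = mu + sigma * eps with sigma = exp(0.5 * logvar) and eps ~ N(0, I)."""
    if eps is None:
        eps = [random.gauss(0.0, 1.0) for _ in mu]
    return [m + math.exp(0.5 * lv) * e for m, lv, e in zip(mu, logvar, eps)]


def kl_to_standard_normal(mu: Sequence[float], logvar: Sequence[float]) -> float:
    """Closed-form KL( N(mu, diag(exp(logvar))) || N(0, I) )."""
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, logvar))


def cvae_loss(pred_actions: Sequence[float], true_actions: Sequence[float],
              mu: Sequence[float], logvar: Sequence[float], beta: float = 10.0) -> float:
    """Mean absolute (L1) action error plus a beta-weighted KL term (beta is an assumed value)."""
    recon = sum(abs(p - t) for p, t in zip(pred_actions, true_actions)) / len(true_actions)
    return recon + beta * kl_to_standard_normal(mu, logvar)
```

At inference, a common choice for such models is to skip the encoder and decode from the prior mean (z = 0), which makes the predicted adjustments deterministic for a given pair of views.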

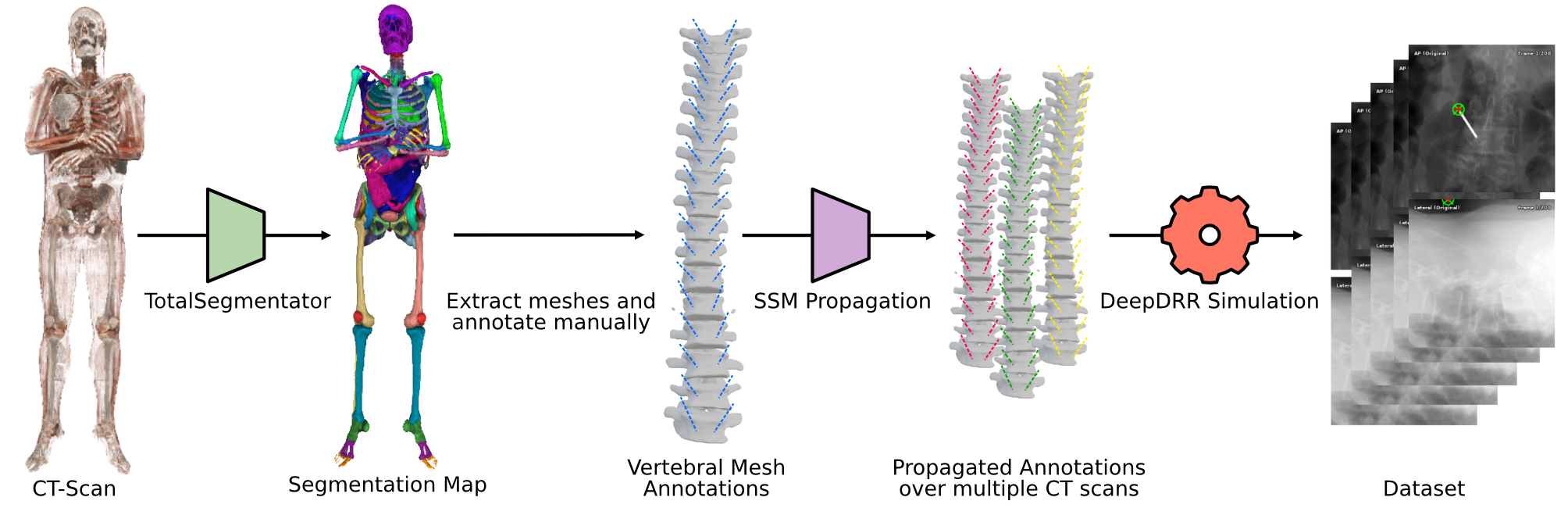

Dataset Generation Pipeline. Left to right: CT scans from the NMDID dataset are preprocessed using TotalSegmentator. Next, representative statistical shape models are extracted, manually annotated, and propagated across multiple CT scans. Lastly, the annotations are simulated via DeepDRR to generate our training data.
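To produce bi-planar training views, each annotated trajectory has to be mapped into the AP and lateral images. DeepDRR performs the full physics-based rendering from the CT volume; the sketch below only illustrates the underlying perspective geometry for placing a cannula overlay, written in plain Python rather than with DeepDRR's API. The patient-axis convention, the source-to-isocentre distance `sid` and source-to-detector distance `sdd` values, and the function names are hypothetical.

```python
from typing import Sequence, Tuple

Vec3 = Sequence[float]


def project(point: Vec3, view: str, sid: float = 750.0, sdd: float = 1000.0) -> Tuple[float, float]:
    """Perspective-project a 3-D point (mm, isocentre at the origin) onto a flat detector.

    Assumed axes: x = left-right, y = anterior-posterior, z = cranio-caudal.
    'ap':  source on the +y axis at distance sid, beam along -y, detector coords (x, z).
    'lat': source on the +x axis at distance sid, beam along -x, detector coords (y, z).
    """
    x, y, z = point
    if view == "ap":
        depth, u, v = sid - y, x, z
    elif view == "lat":
        depth, u, v = sid - x, y, z
    else:
        raise ValueError(f"unknown view: {view}")
    if depth <= 0:
        raise ValueError("point lies at or behind the X-ray source")
    scale = sdd / depth  # geometric magnification for this depth
    return (u * scale, v * scale)


def project_cannula(entry: Vec3, tip: Vec3, view: str,
                    sid: float = 750.0, sdd: float = 1000.0) -> Tuple[Tuple[float, float], Tuple[float, float]]:
    """Project both cannula endpoints; the overlay is the 2-D segment between them."""
    return project(entry, view, sid, sdd), project(tip, view, sid, sdd)
```

Because the two views share the cranio-caudal axis, the same vertebral level appears at consistent heights in both images, which is part of what makes the pair jointly informative for 3-D alignment.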

Method

To enable surgeon-like spinal trajectory planning directly from bi-planar X-rays, we train a transformer-based imitation learning policy using an incremental action representation that predicts cannula adjustments from AP and lateral radiographs. A high-level overview of the model and our approach is shown in the overview figure above. The large-scale annotated training data required to train such a model is generated by constructing a realistic simulation environment with automatically derived safe trajectories. The data generation pipeline consists of three main components: (1) preprocessing and segmentation of clinical CT data, (2) generation and filtering of safe trajectories, and (3) simulation of bi-planar radiographs; the full pipeline is illustrated in the figures above. Once the data is generated, the policy is trained on the resulting bi-planar X-ray sequences and their corresponding cannula adjustments.

Citation

Klitzner, F., Inigo, B., Killeen, B. D., Seenivasan, L., Song, M., Krieger, A., & Unberath, M. (2025).

Investigating Robot Control Policy Learning for Autonomous X-ray-guided Spine Procedures.

arXiv:2511.03882.